The internet has always been envisioned as a platform to democratise public spaces by bringing more voices to the table and transforming how interactions and businesses are conducted. The rapid adoption of internet-based technologies, however, has created not just opportunities but also challenges that require urgent and strategic resolution.

With vices such as Child Sexual Abuse Material (CSAM) proliferating across online platforms, calls to dilute safe harbour protections for internet intermediaries and to mandate proactive monitoring and automated blocking of user-generated content have been gaining traction. Such measures, though well-intentioned, have deleterious implications for users' fundamental rights to free speech and privacy. Their reasonableness and feasibility therefore need to be evaluated in a constitutional democracy like India. After all, protecting the fundamental rights of the people is critical for preserving democracy and the rule of law.

Safe harbour: The hallmark of Indian democracy

The Indian ‘Safe Harbour’ regime under Section 79 of the Information Technology Act, 2000 (IT Act) is modelled on Section 230 of the American Communications Decency Act (CDA). The overarching idea is to immunise online platforms against liability arising from third-party content hosted on them when they have no ‘actual knowledge’ of its unlawfulness. This protection, accorded to platforms like Twitter or Snapchat, is crucial for building a free and liberal internet ecosystem where people can voice their opinions without fear of proactive monitoring or pre-censorship.

The importance of preserving safe harbour to promote online free speech was reiterated in Shreya Singhal v. Union of India (2015). However, recent threats to child safety from the rapid proliferation of child sexual abuse material on the internet have led the Indian government to contemplate limiting the ambit of safe harbour protection. The Draft Information Technology (Intermediary Guidelines) Rules 2018 [The Draft Rules 2018], which seek to impose criminal liability on intermediaries that fail to proactively monitor and automatically block harmful user-generated content, are evidence of this. Such measures are concerning because they empower intermediaries to restrict citizens' freedom of speech and expression and right to privacy, something that only the judiciary is constitutionally authorised to do.

No Safe Harbour, No Safety: The American Experience

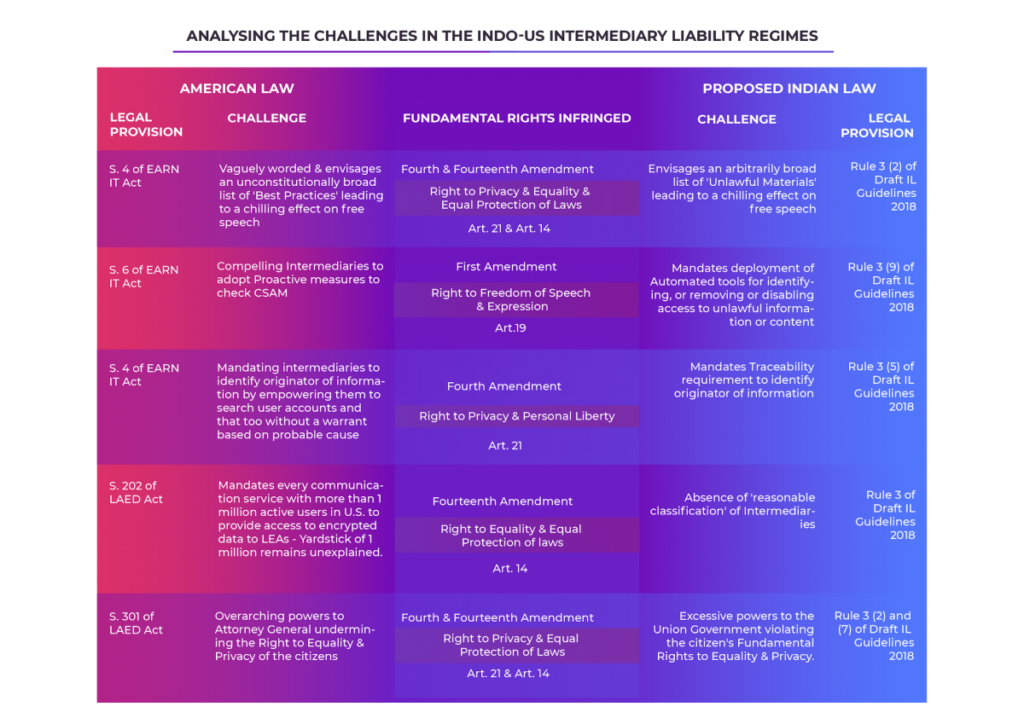

Recently, the Delhi-based technology policy think-tank The Dialogue conducted a study analysing the American Safe Harbour regime, from the inception of Section 230 of the CDA to the recently enacted Stop Enabling Sex Traffickers Act (SESTA) and Allow States and Victims to Fight Online Sex Trafficking Act (FOSTA), together known as FOSTA-SESTA, as well as the proposed Eliminating Abusive and Rampant Neglect of Interactive Technologies (EARN IT) Act and Lawful Access to Encrypted Data (LAED) Act. An impact assessment of the socio-economic ramifications of these laws concluded that they create more challenges than they seek to resolve.

Studies have shown that FOSTA-SESTA, which was meant to curb online sex trafficking, has in fact put the lives of sex workers at greater risk. Likewise, there are serious concerns that the proposed EARN IT and LAED Acts, which would introduce traceability (in effect, deliberate vulnerabilities) on encrypted platforms to catch those who spread CSAM, will, like FOSTA-SESTA, fail to meet their objective.

Decrypting recent developments in the Indian Safe Harbour regime

Section 79 of the IT Act envisages an open and inclusive internet ecosystem, while appropriate legislation supplies the necessary checks in the interest of user safety. Rule 11 of the recently notified rules under the Protection of Children from Sexual Offences (POCSO) Act, which criminalises a wide range of CSAM-related conduct, is apt evidence of this. However, despite the existence of adequate regulatory mechanisms, the Indian State's growing appetite for imposing stricter regulations on intermediaries to address CSAM is concerning.

Since the Shreya Singhal decision, the Indian judiciary has shown a retrogressive trend of attempting to dilute safe harbour in the name of online safety. In the 2017 case of Sabu Mathew George v. Union of India, the apex court issued interim orders directing Google, Microsoft and Yahoo to ‘auto-block’ pre-natal sex determination ads from appearing in search results ‘based on their own understanding’. This contradicts Shreya Singhal, in which the Supreme Court itself observed that requiring intermediaries to adjudicate the legality of content is not only practically cumbersome but also constitutionally flawed.

The committee set up in the ongoing In re: Prajwala matter to propose solutions for controlling the proliferation of sexually abusive content on the internet has recommended technologies like hashing to combat this challenge. Though hashing may succeed in checking the spread of sexually offensive content, it works by scanning user-generated content and matching it against a database of known material to identify CSAM. This is not only privacy-infringing but can also be used to stifle dissent.
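To make the privacy concern concrete, here is a minimal Python sketch of hash matching. It is illustrative only and assumes a simple exact-match scheme using SHA-256 from the standard library; deployed systems generally use perceptual hashes that tolerate resizing or re-encoding, and the names `KNOWN_HASHES`, `digest` and `scan_upload` are hypothetical, not taken from the committee's recommendations.

```python
import hashlib

# Hypothetical database of digests of previously identified prohibited files.
# Exact SHA-256 matching is an assumption for illustration: it only catches
# byte-identical copies, unlike the perceptual hashes used in practice.
KNOWN_HASHES = {
    hashlib.sha256(b"previously flagged file").hexdigest(),
}


def digest(content: bytes) -> str:
    """Return the SHA-256 hex digest of an uploaded file's bytes."""
    return hashlib.sha256(content).hexdigest()


def scan_upload(content: bytes, known: set[str] = KNOWN_HASHES) -> bool:
    """Return True if the upload matches an entry in the hash database.

    Every upload has to be read and hashed to perform this check,
    whether or not it turns out to match.
    """
    return digest(content) in known


uploads = [b"holiday photo", b"previously flagged file", b"protest pamphlet"]
flagged = [scan_upload(u) for u in uploads]
print(flagged)  # [False, True, False]
```

The matching logic is indifferent to what the database contains: if a digest of, say, a protest pamphlet were added to `KNOWN_HASHES`, the same function would flag it. Whoever controls the list controls what gets blocked, which is the basis of the concern about dissent.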

A similar jurisprudence of compromising the rights to privacy and free speech to promote online safety is also emerging from the high courts. Recently, the Delhi High Court in Ms. X v. State (2020) issued an order stating that acting on CSAM will be a prerequisite for intermediaries to avail safe harbour protection. When appropriate criminal laws already exist under the POCSO Act, making the curtailment of CSAM a precondition for availing safe harbour is deeply concerning.

The way forward: Protecting safe harbour to ensure safety

Though often viewed as opposites, privacy and safety are two sides of the same coin. Guaranteeing citizens the rights to free speech and privacy is critical for ensuring not just online safety but also national security. The American experience has already demonstrated that creating sweeping exceptions to safe harbour protections in the name of online safety is counterproductive, as they make the internet less safe for women, children and people from marginalised communities. Similar views have been echoed by prominent international voices such as UNICEF and the U.N. Special Rapporteur on freedom of opinion and expression, who have stressed the importance of privacy for ensuring online child safety.

India must draw lessons from the American intermediary liability regime and focus on building the capacity of its investigative agencies through meaningful collaboration between Big Tech companies, law enforcement agencies (LEAs) and academia. Such collaboration would help in devising innovative solutions to child safety challenges. While legislation akin to FOSTA-SESTA and the EARN IT and LAED Acts should be avoided, initiatives like the proposed American Invest in Child Safety Act should be encouraged, as they would give LEAs greater funding to ensure effective investigation and timely prosecution of those who spread CSAM.

Shruti Shreya works at The Dialogue as a policy research assistant. Pranav Bhaskar Tiwari is the programme manager for intermediary liability & encryption at The Dialogue. This article was first published in ThePrint.